Looking at the logs, it’s been down since October 22, 2023. I’m not sure if that is when my fiber IP changed or when the line from the fiber jack to the router in the basement broke. The line quit working right before I had to work from home, so I just plugged the wifi router directly into the fiber jack so I could work, and I only got around to fixing it yesterday. I ended up just replacing the RJ45 connectors on both ends of the cable.

After the box was back on the internet, I updated my current public IP with Namecheap and started running updates on Ubuntu. I didn’t realize the upgrade process would move me to the latest LTS release, so I spent about half an hour figuring out why Apache would not start. It turns out one of the several warning messages I clicked through was telling me it was going to install PHP 8.1 and remove 7.4. Since I made sure to tell it not to update any of my config files, the Apache config still pointed at the old PHP module, which made Apache fail on startup. I just had to disable the old module and enable the new one.
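For anyone hitting the same thing, here is a rough sketch of the module swap using the standard Debian/Ubuntu Apache helpers (`a2dismod`, `a2enmod`). The version numbers 7.4 and 8.1 are assumptions based on my box, and the `run` and `switch_php_module` helpers are just names I made up for illustration. It only prints the commands by default, so nothing changes until you set `DRY_RUN=0`.

```shell
#!/bin/sh
# Dry-run by default: commands are printed, not executed.
# Set DRY_RUN=0 to actually run them (needs sudo on the server).
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Swap Apache's PHP module from one version to another.
# The versions passed in are assumptions; list
# /etc/apache2/mods-enabled/ to see what is actually enabled.
switch_php_module() {
  old="$1"
  new="$2"
  run sudo a2dismod "php${old}"       # disable the module for the removed PHP
  run sudo a2enmod "php${new}"        # enable the module for the installed PHP
  run sudo apache2ctl configtest      # check the config before restarting
  run sudo systemctl restart apache2  # restart Apache to load the new module
}

switch_php_module 7.4 8.1
```

Running the config test before the restart is optional, but it would have saved me some of that half hour.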

So the site is back up, and after a quick run through certbot it’s secure again too!

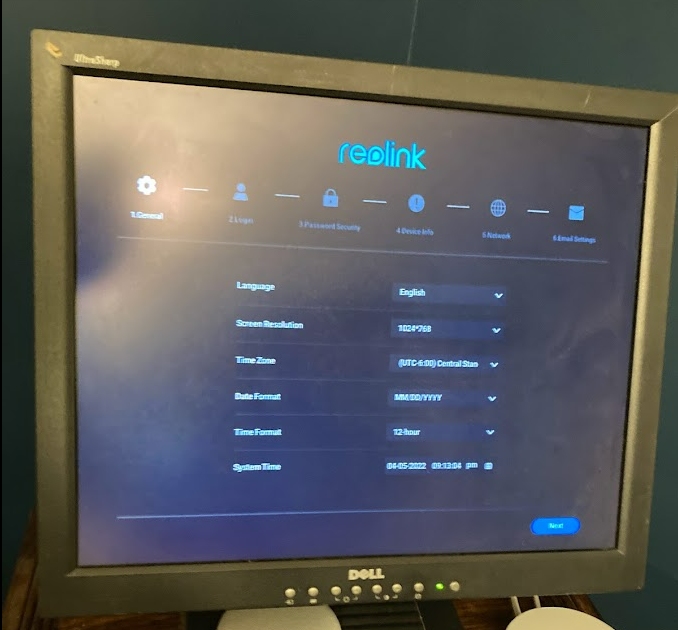

All this is in preparation for me to set up Apache Guacamole on the site. This should allow me to get around all the filtering at work so I can remote into my home computer and work on things I can’t on my work PC. My next post will probably be about setting that up. It is fairly complicated, and there are no prebuilt apt packages.